When we finally upgraded our Rails application to v5, we made plans to take advantage of its WebSockets capabilities.

Locally, on development machines, things chugged along smoothly. Then it all broke down on our staging stack.

Here’s the basic setup:

* Rails 5.0.x

* Ember 2.x (using Ember Cable for WebSockets)

* Puma

* Nginx (for reverse proxying Ember calls to Rails + serving Ember app)

* AWS ElastiCache Redis

* Postgres hosted on AWS RDS

* EC2 instance + ELB + custom security group… etc.

* Entire stack is orchestrated with Chef running on AWS OpsWorks

I didn’t think the setup was anything out of the ordinary per se. The only exception is probably my choice of ElastiCache over running our own Redis instance on an EC2 server (or having it hosted at Redis Labs or something). Other than that, it’s pretty vanilla.

Immediately we ran into trouble getting Ember to make a proper connection. We kept seeing this error:

    WebSocket connection to 'ws://staging.domain.name/cable' failed: Connection closed before receiving a handshake response

First things first: we had to make sure our /cable endpoint was proxied properly so that Rails could answer the calls. So in our main Nginx config file, we added:

    location /cable {
      proxy_set_header X-Real-IP $remote_addr;
      proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
      proxy_set_header Host $http_host;
      proxy_set_header Upgrade $http_upgrade;
      proxy_set_header Connection "Upgrade";
      proxy_redirect off;
      proxy_http_version 1.1;
      proxy_pass http://app_server;
    }

It still didn’t quite work.

    I, [2017-03-22T18:46:39.166623 #4175] INFO -- : Started GET "/cable" for 127.0.0.1 at 2017-03-22 18:46:39 +0000
    I, [2017-03-22T18:46:39.167699 #4175] INFO -- : Started GET "/cable/" [WebSocket] for 127.0.0.1 at 2017-03-22 18:46:39 +0000
    E, [2017-03-22T18:46:39.167837 #4175] ERROR -- : Request origin not allowed: https://staging.domain.name
    E, [2017-03-22T18:46:39.167950 #4175] ERROR -- : Failed to upgrade to WebSocket (REQUEST_METHOD: GET, HTTP_CONNECTION: Upgrade, HTTP_UPGRADE:)
    I, [2017-03-22T18:46:39.168064 #4175] INFO -- : Finished "/cable/" [WebSocket] for 127.0.0.1 at 2017-03-22 18:46:39 +0000

Obviously, we needed to tell Rails that our staging subdomain, along with its https protocol, should be allowed. So we added this to our Rails config/environments/staging.rb file:

    config.action_cable.allowed_request_origins = %w(https://staging.domain.name staging.domain.name)
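For what it's worth, here is a minimal Ruby sketch of how I understand the origin check to behave. It is not the Rails source: `origin_allowed?` is a hypothetical helper, and the assumption is that each allowed entry is compared against the browser's Origin header with `===`, so a string has to match the whole header (scheme included) while a regexp can match more loosely.

```ruby
# Simplified sketch of an Action Cable-style origin check (an assumption about
# the behaviour, not the actual Rails implementation).
# `origin_allowed?` is a hypothetical helper name.
def origin_allowed?(origin, allowed_origins)
  # String === String is plain equality; Regexp === String is a pattern match.
  Array(allowed_origins).any? { |allowed| allowed === origin }
end

allowed = %w(https://staging.domain.name staging.domain.name)

# The Origin header sent by the browser includes the scheme.
puts origin_allowed?("https://staging.domain.name", allowed) # matched by the first entry
puts origin_allowed?("http://staging.domain.name", allowed)  # no entry matches plain http

# A regexp entry can cover several subdomains at once.
puts origin_allowed?("https://staging.domain.name", [%r{\Ahttps://.*\.domain\.name\z}])
```

If that assumption holds, the bare `staging.domain.name` entry never matches anything a browser sends, since the Origin header always carries a scheme. It is harmless, but the `https://` entry is the one doing the work.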

Once we squared that away, we were still left with that dreadful error on the fourth line of the log above:

    Failed to upgrade to WebSocket (REQUEST_METHOD: GET, HTTP_CONNECTION: Upgrade, HTTP_UPGRADE:)

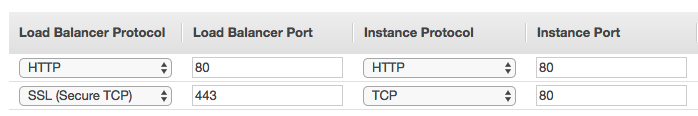

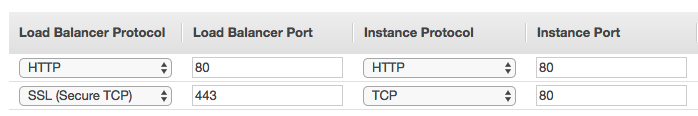

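Somehow, the $http_upgrade variable in Nginx was simply not being set, despite the browser’s request. As it turns out, WebSocket traffic isn’t quite HTTP/HTTPS, and the devil is in the details of the AWS ELB (load balancer): with an HTTPS listener, the ELB apparently treats the request as plain HTTP, and the Upgrade header never makes it to Nginx.

In the ELB listener settings, I simply swapped out HTTPS for SSL. And BOOM! Like that, it worked: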

    Successfully upgraded to WebSocket (REQUEST_METHOD: GET, HTTP_CONNECTION: Upgrade, HTTP_UPGRADE: websocket)
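To make the difference between the failing and the working log lines concrete, here is a small Ruby sketch of the kind of check a server makes before upgrading a connection. `websocket_upgrade?` is a hypothetical helper operating on a Rack-style env hash. It is an illustration of the handshake rule, not what Action Cable literally runs.

```ruby
# Hypothetical helper: does this Rack-style env describe a WebSocket upgrade?
# A handshake needs a GET request, a Connection header containing "upgrade",
# and an Upgrade header equal to "websocket".
def websocket_upgrade?(env)
  connection_tokens = env["HTTP_CONNECTION"].to_s.downcase.split(/\s*,\s*/)
  upgrade = env["HTTP_UPGRADE"].to_s.downcase

  env["REQUEST_METHOD"] == "GET" &&
    connection_tokens.include?("upgrade") &&
    upgrade == "websocket"
end

# Header values taken from the two log lines in this post.
failed  = { "REQUEST_METHOD" => "GET", "HTTP_CONNECTION" => "Upgrade", "HTTP_UPGRADE" => "" }
working = { "REQUEST_METHOD" => "GET", "HTTP_CONNECTION" => "Upgrade", "HTTP_UPGRADE" => "websocket" }

puts websocket_upgrade?(failed)  # false
puts websocket_upgrade?(working) # true
```

Note that `HTTP_CONNECTION` said `Upgrade` in both cases because our Nginx config hardcodes that header. Only `Upgrade` is copied from the incoming request via `$http_upgrade`, which is why it was the one that came through empty.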

Now the connection was being made properly. Except for this one little thing: the browser had to keep reinitializing the connection every few seconds. And we noticed the UI simply wasn’t being updated despite changes to the data. Sigh…

Next stop was to test if the EC2 instance itself was actually talking to AWS ElastiCache. There are a couple of ways to try this:

In Rails:

    irb(main):001:0> redis = Redis.new(:host => 'my-elasticache-instance-identifier.0001.usw2.cache.amazonaws.com', :port => 6379)
    => #<Redis client v3.3.3 for redis://my-elasticache-instance-identifier.0001.usw2.cache.amazonaws.com:6379/0>
    irb(main):002:0> redis.ping
    Redis::CannotConnectError: Error connecting to Redis on my-elasticache-instance-identifier.0001.usw2.cache.amazonaws.com:6379 (Redis::TimeoutError)
    ...

Using Telnet:

    $ telnet my-elasticache-instance-identifier.0001.usw2.cache.amazonaws.com 6379
    Trying /ip ADDRESS/...
    # eventually times out
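If telnet isn't installed on the box, the same reachability test can be sketched in plain Ruby using only the standard library. `port_reachable?` is a hypothetical helper name and the 3-second default timeout is an arbitrary choice. All it tells you is whether a TCP connection can be opened, nothing about Redis itself.

```ruby
require "socket"

# Hypothetical helper: returns true if a TCP connection to host:port can be
# opened within `timeout` seconds, false if it times out, is refused, or the
# hostname cannot be resolved.
def port_reachable?(host, port, timeout: 3)
  # Socket.tcp closes the socket when the block returns.
  Socket.tcp(host, port, connect_timeout: timeout) { true }
rescue SystemCallError, SocketError, IOError
  false
end

# Example call from the EC2 instance (hostname as used in this post):
#   port_reachable?("my-elasticache-instance-identifier.0001.usw2.cache.amazonaws.com", 6379)
```

A `false` that takes the full timeout to come back usually points at traffic being silently dropped (as a firewall or security group would do), whereas an immediate `false` is more likely a refused connection or a DNS problem.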

OMFG. WHAT ELSE IS WRONG NOW??!!

As it turns out, the devil, again, is in the details. This time, it’s in AWS Security Groups.

AWS resources are only allowed to talk to each other if their security groups permit it, and the easiest way to get there is to put them in the same security group (among other things). In this case, AWS ElastiCache sits in the default security group, which we’re not allowed to change (not to my knowledge anyway). So the trick was to make sure that our OpsWorks Layers were also assigned the default security group.

Now, the OpsWorks documentation says that you must reboot each instance within the layer for the new security group settings to kick in. But the truth is I had to spawn brand new instances for the new security group to stick. Once that was done, everything finally started to gel:

    irb(main):001:0> redis = Redis.new(:host => 'my-elasticache-instance-identifier.0001.usw2.cache.amazonaws.com', :port => 6379)
    => #<Redis client v3.3.3 for redis://my-elasticache-instance-identifier.0001.usw2.cache.amazonaws.com:6379/0>
    irb(main):002:0> redis.ping
    => "PONG"
    ...

I hate technology sometimes.

References:

* https://blog.jverkamp.com/2015/07/20/configuring-websockets-behind-an-aws-elb/ (cached in PDF)
